Microsoft 365 Copilot data readiness is essential for secure and effective AI adoption. Copilot is transforming the way we work by boosting productivity, creativity, and efficiency across Microsoft 365. But here is the challenge: Copilot surfaces whatever each user already has permission to see. If sites are overshared or permissions are too broad, Copilot makes that sensitive information far easier to find.

So, the big question is:

Is your data ready for Microsoft 365 Copilot?

I recently joined Mark Thompson from The Inform Team for a webinar on this topic. We explored how Copilot interacts with your Microsoft 365 environment, the risks of oversharing, and how to build a secure, governed foundation for responsible AI adoption.

🎥 Watch the full webinar: YouTube Recording

🔍 Microsoft 365 Copilot Data Readiness: Key Takeaways

🔐 1. Copilot Surfaces What You Already Have Access To

Copilot does not introduce new risks. It simply reveals what users already have access to. If your Teams or SharePoint sites are overly permissive, Copilot will reflect that.

“It used to be like finding a needle in a haystack. Now, with Copilot, it’s like standing outside the haystack with a magnet.” — Nikki Chapple

✅ Action:

- Check for public Teams and SharePoint sites.

  - Go to Microsoft 365 admin centre → Teams & groups → Active teams and groups.

  - If sensitive sites like HR are public, switch them to private immediately.

- Use container sensitivity labels to automatically govern access permissions on Microsoft 365 Groups, Teams and sites.
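If you prefer to script the first check rather than click through the admin centre, the idea can be sketched in a few lines of Python. This is a minimal sketch, not a finished tool: it assumes you have already exported your groups as a list of dictionaries shaped like the Microsoft Graph group resource (`displayName`, `visibility`, `resourceProvisioningOptions`). The keyword list and the sample data are hypothetical, and no API call is made.

```python
import re

# Hypothetical keyword list; adjust it to your own naming conventions.
SENSITIVE_WORDS = {"hr", "finance", "payroll", "legal"}


def find_risky_public_groups(groups):
    """Return public groups whose display name contains a sensitive word."""
    risky = []
    for group in groups:
        # Only public groups are of interest for this check.
        if group.get("visibility") != "Public":
            continue
        # Match whole words so "Three" does not trigger on "hr".
        name = (group.get("displayName") or "").lower()
        words = set(re.findall(r"[a-z0-9]+", name))
        if words & SENSITIVE_WORDS:
            # Graph marks Teams-enabled groups with "Team" in this property.
            is_team = "Team" in (group.get("resourceProvisioningOptions") or [])
            risky.append({"name": group["displayName"], "is_team": is_team})
    return risky


# Hypothetical sample data standing in for an export of your groups.
sample_groups = [
    {"displayName": "HR Casework", "visibility": "Public",
     "resourceProvisioningOptions": ["Team"]},
    {"displayName": "Finance Leadership", "visibility": "Private",
     "resourceProvisioningOptions": ["Team"]},
    {"displayName": "Three Peaks Social", "visibility": "Public",
     "resourceProvisioningOptions": []},
]

for hit in find_risky_public_groups(sample_groups):
    print(hit)
```

Name matching is only a rough first pass. A public site with an innocent name can still hold sensitive files, so treat the output as a shortlist for review, not a clean bill of health.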

📎 Learn more: How To Use Sensitivity Labels To Control Access To Microsoft Teams, Groups And Sites, and How to Add Sensitivity Labels to Microsoft 365 Groups, Teams and Sites

🏷️ 2. Use Sensitivity Labels to Control Access

Copilot respects Microsoft Purview sensitivity labels. Content that Copilot generates inherits the highest-priority label among the labelled files it draws on, and Copilot can be blocked from accessing files that carry specific labels.

“If a file is labelled ‘Highly Confidential – Block Copilot’, the Copilot icon will be greyed out in Word or PowerPoint.” — Nikki Chapple
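To make the "highest priority label" rule concrete, here is a small Python illustration of the selection logic. It is a sketch of the idea only, not how Copilot is implemented. The `Label` class, the label names, and the priority numbers are hypothetical, and the sketch assumes that a larger number means a higher priority.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Label:
    name: str
    priority: int  # Assumption: a larger number means a higher priority.


def label_for_generated_content(source_labels) -> Optional[Label]:
    """Pick the highest-priority label among the referenced files.

    Unlabelled files are passed in as None and are ignored.
    """
    labelled = [label for label in source_labels if label is not None]
    if not labelled:
        return None
    return max(labelled, key=lambda label: label.priority)


# Hypothetical labels for three referenced files, one of them unlabelled.
general = Label("General", 1)
confidential = Label("Confidential", 2)
highly_confidential = Label("Highly Confidential", 3)

chosen = label_for_generated_content([general, None, highly_confidential])
print(chosen.name)
```

The practical takeaway is that one highly confidential source file is enough to make the generated draft highly confidential too, which is why label priority order matters.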

✅ Action:

- Create a sub-label specifically for blocking Copilot access (for example, Highly Confidential – Block Copilot).

- Use Data Loss Prevention (DLP) policies to enforce the restriction on files that carry that label.

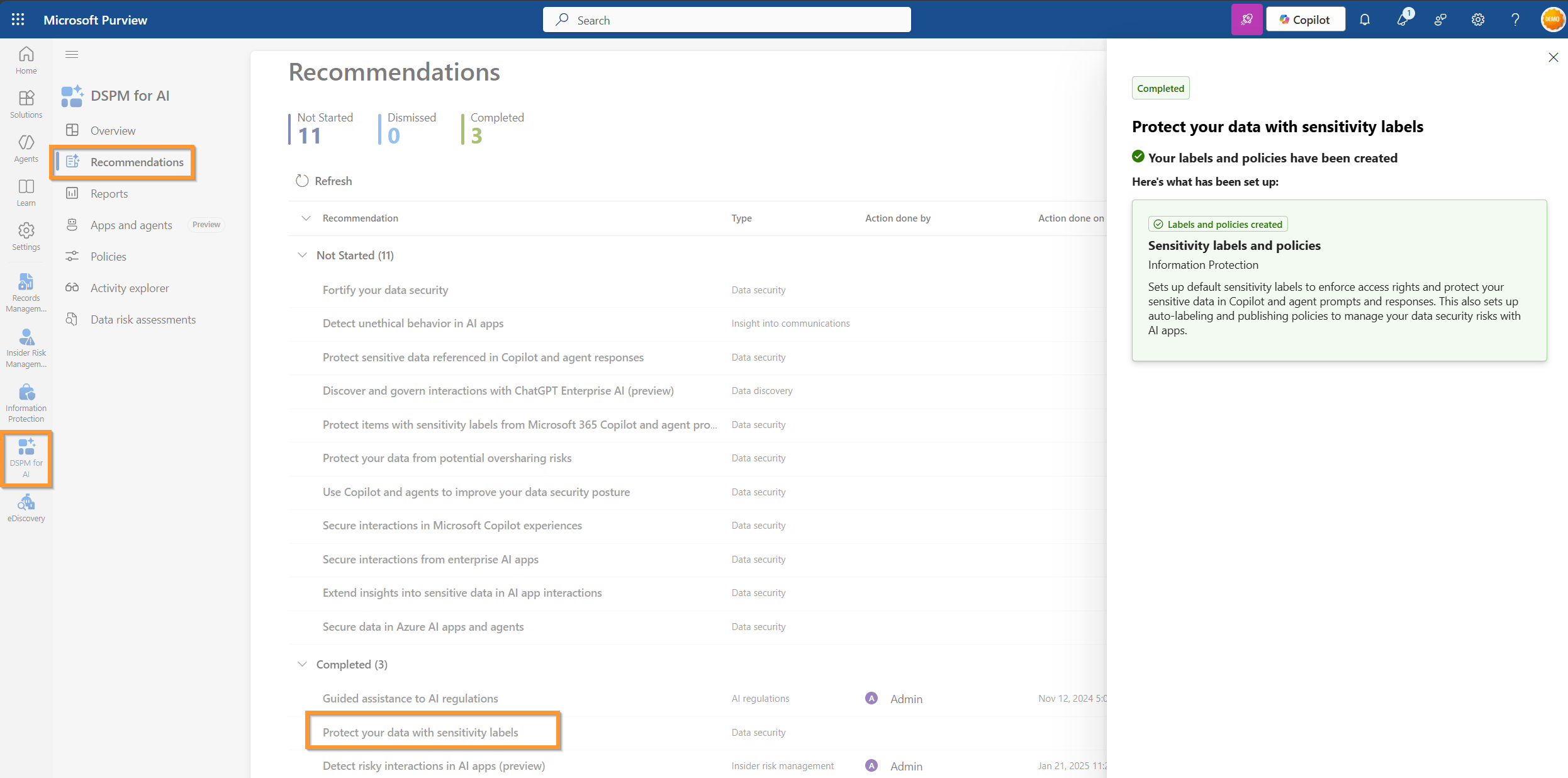

- If you have not started labelling yet, use Microsoft Purview’s Data Security Posture Management for AI > Recommendations to create a baseline set of labels.

📎 Learn more: Microsoft Information Protection – In The Centre Of It All

🚫 3. The Risk of Doing Nothing Is Real

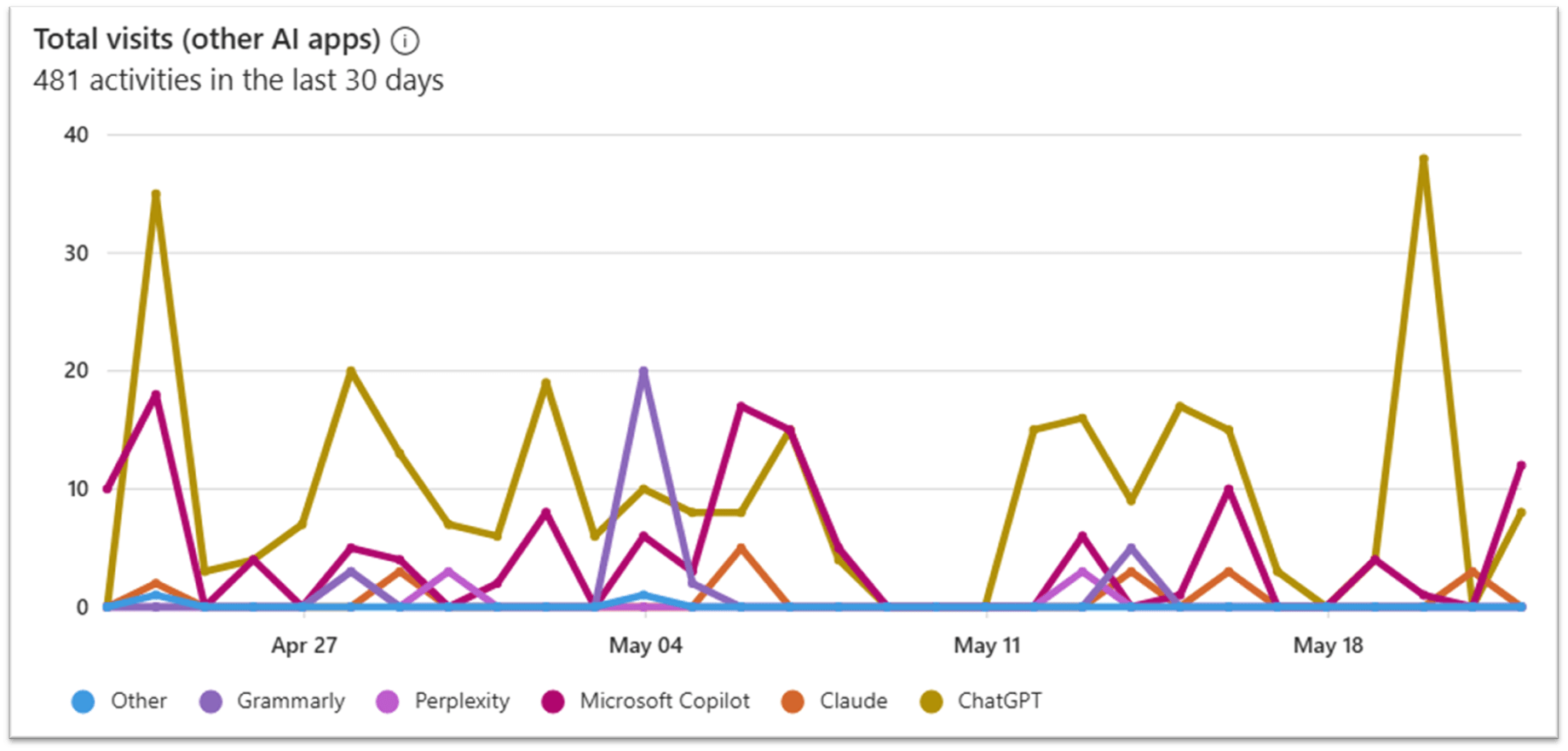

78% of AI users are already bringing their own AI tools to work (Source: AI at Work Is Here. Now Comes the Hard Part – 2024 Work Trend Index Annual Report). In practice that means consumer GenAI tools like ChatGPT, Gemini, or DeepSeek. If you do not offer a secure, enterprise-ready alternative, users will find their own.

“If you are not blocking other AI tools, it is not a risk. It is already an issue.” — Nikki Chapple

✅ Action:

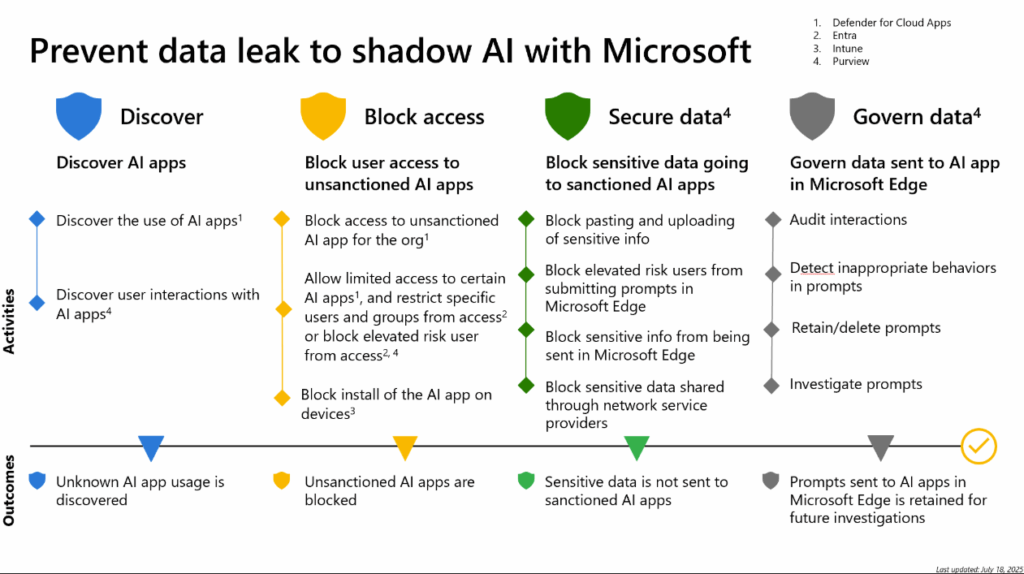

- Apply DLP and Insider Risk Management (IRM) policies to prevent sensitive data leaks.

- Use Microsoft Purview DSPM for AI to monitor shadow AI usage.

- Block risky tools via Defender for Cloud Apps or firewall rules.
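Before you block anything, it helps to know how much shadow AI traffic you actually have. The sketch below shows the kind of tally you could run over a web proxy or firewall log export. It is an illustration under stated assumptions: the row format (`user`, `host`), the sample rows, and the short domain list are all hypothetical, and it is not a substitute for what DSPM for AI or Defender for Cloud Apps report.

```python
from collections import Counter

# Illustrative, deliberately incomplete list of consumer GenAI domains.
UNSANCTIONED_AI_DOMAINS = {"chatgpt.com", "gemini.google.com", "chat.deepseek.com"}


def count_shadow_ai_hits(log_rows):
    """Count requests per user to domains on the unsanctioned list."""
    hits = Counter()
    for row in log_rows:
        host = row["host"].lower().rstrip(".")
        # Match the domain itself or any subdomain of it.
        if any(host == d or host.endswith("." + d) for d in UNSANCTIONED_AI_DOMAINS):
            hits[row["user"]] += 1
    return hits


# Hypothetical proxy log rows.
sample_rows = [
    {"user": "alex@contoso.com", "host": "chatgpt.com"},
    {"user": "alex@contoso.com", "host": "Chat.DeepSeek.com"},
    {"user": "sam@contoso.com", "host": "www.office.com"},
    {"user": "sam@contoso.com", "host": "notchatgpt.com"},
]

for user, count in count_shadow_ai_hits(sample_rows).most_common():
    print(user, count)
```

A tally like this gives you evidence for the conversation with the business: who is using what, and how often, before you decide whether to block, warn, or offer an alternative.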

📎 Read more: Governing AI Shadow IT with Microsoft Purview

📊 4. Monitor and Audit Copilot Usage

Copilot activity is fully auditable. You can track prompts, responses, and file access across Microsoft 365.

“You can use audit logs, DSPM for AI, and Viva Insights to see what is being accessed, by whom, and how it is helping.” — Nikki Chapple

✅ Action:

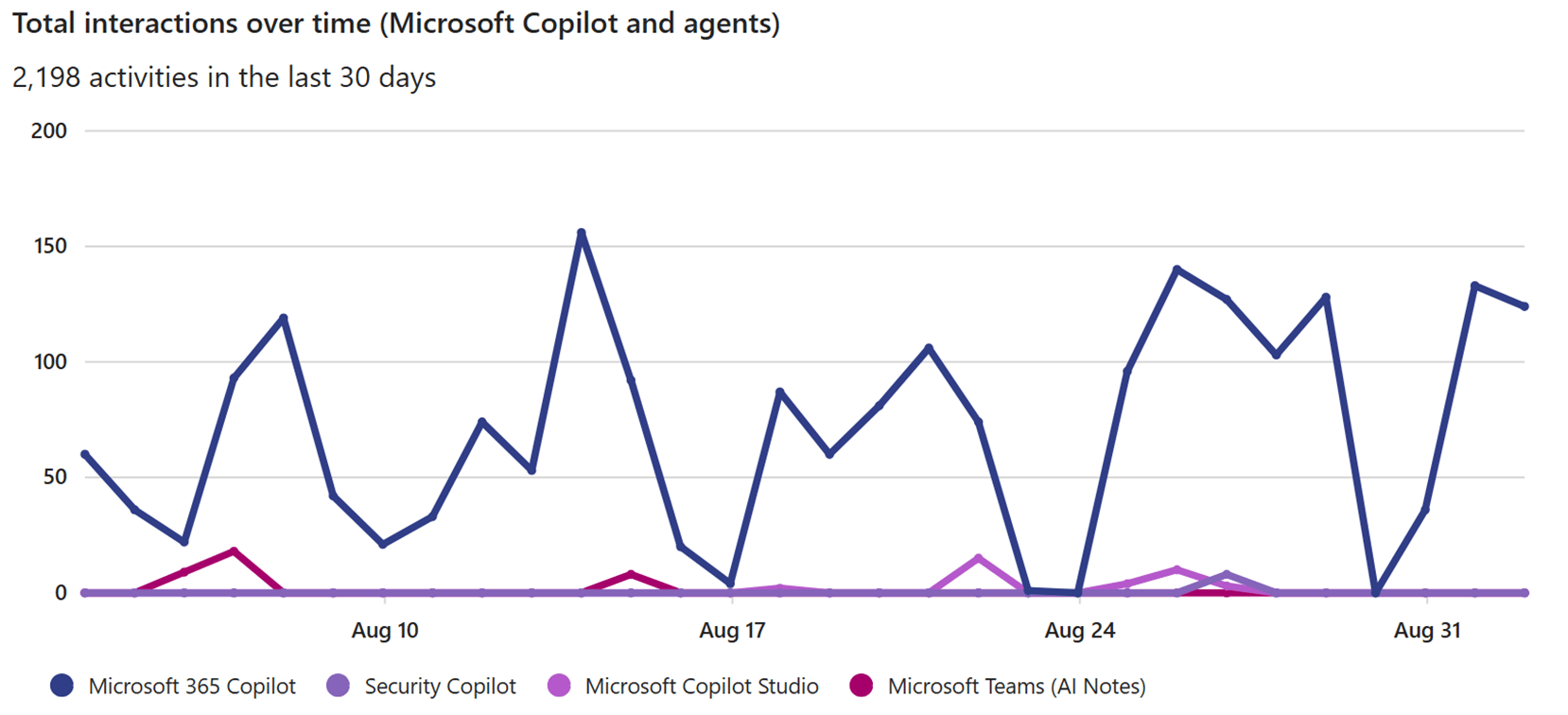

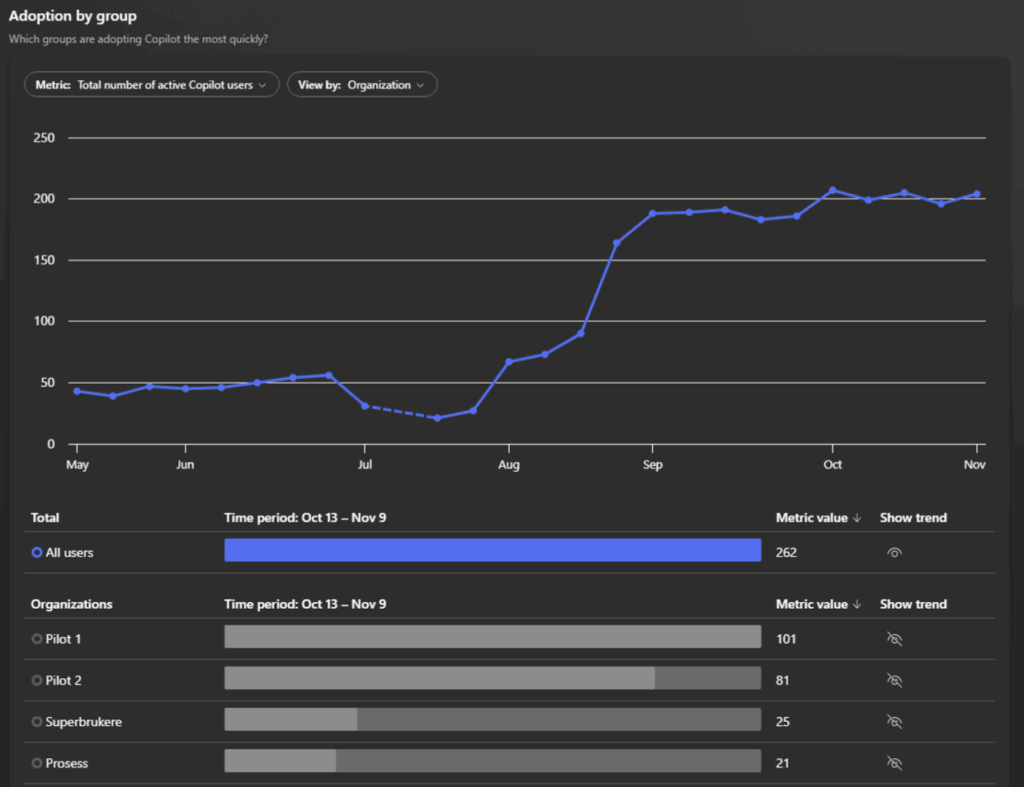

- Use audit logs, DSPM for AI, and Viva Insights to monitor usage.

- Segment users into pilot groups to track adoption trends and measure value.
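If you export audit records for analysis, tracking adoption per pilot group comes down to a simple calculation. Here is a minimal Python sketch, assuming each exported record is a dictionary with `UserId` and `Operation` fields and that Copilot activity appears with the operation name `CopilotInteraction`. The pilot group names, users, and records are hypothetical, so check the field names against your own export before relying on it.

```python
from collections import defaultdict


def active_share_by_group(audit_records, pilot_groups):
    """Return the share of each pilot group with at least one Copilot interaction."""
    # Reverse lookup from user to pilot group, case-insensitive.
    user_to_group = {
        user.lower(): group
        for group, users in pilot_groups.items()
        for user in users
    }
    active = defaultdict(set)
    for record in audit_records:
        # Assumption: Copilot activity is logged under this operation name.
        if record.get("Operation") != "CopilotInteraction":
            continue
        user = (record.get("UserId") or "").lower()
        group = user_to_group.get(user)
        if group is not None:
            active[group].add(user)
    return {
        group: len(active[group]) / len(users)
        for group, users in pilot_groups.items()
        if users
    }


# Hypothetical pilot groups and audit records.
pilot_groups = {
    "Wave 1": ["ana@contoso.com", "ben@contoso.com"],
    "Wave 2": ["cy@contoso.com", "di@contoso.com"],
}
audit_records = [
    {"UserId": "ana@contoso.com", "Operation": "CopilotInteraction"},
    {"UserId": "ana@contoso.com", "Operation": "CopilotInteraction"},
    {"UserId": "Ben@contoso.com", "Operation": "CopilotInteraction"},
    {"UserId": "cy@contoso.com", "Operation": "CopilotInteraction"},
    {"UserId": "di@contoso.com", "Operation": "FileAccessed"},
]

for group, share in active_share_by_group(audit_records, pilot_groups).items():
    print(f"{group}: {share:.0%} of users active")
```

Counting distinct active users, not raw interactions, stops one enthusiastic user from masking a group where most people have not tried Copilot yet.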

📎 Explore: Measure Copilot and Gen AI Success and Risks

🧭 5. You Do Not Need Perfect Governance to Start

Waiting for perfect governance can delay adoption and increase risk. Roll out Copilot in phases while improving governance in parallel.

“Copilot does not create new risks. It reveals existing ones. And if it reveals them, you can fix them.” — Mark Thompson

✅ Action:

- Use Microsoft Purview deployment blueprints:

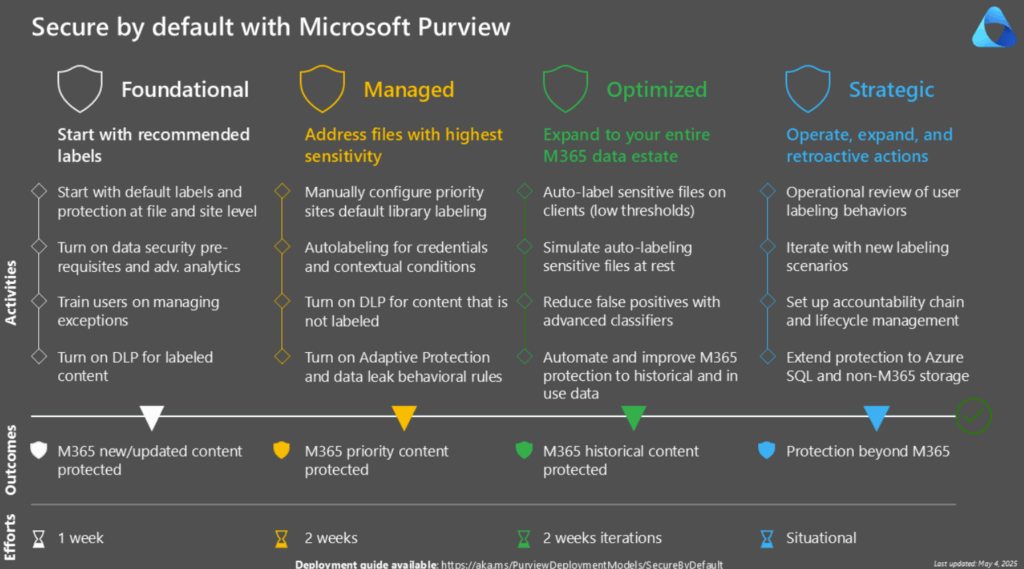

- Secure by Default with Microsoft Purview

- Prevent Data Leakage to Shadow AI

- Address Oversharing in Microsoft 365 Copilot

📎 Read: Microsoft Purview DLP Best Practices

❓ Frequently Asked Questions (FAQs)

Can Copilot access sensitive HR or finance data?

Only if users already have access. Review public Teams and SharePoint sites to prevent unintended exposure.

What if our data is not labelled yet?

Start with new and edited content. Use Microsoft’s Secure by Default blueprint to phase in labelling.

Can we block Copilot from accessing specific files?

Yes. Use sensitivity labels and DLP policies to restrict access to specific content.

How do we monitor Copilot usage?

Use Microsoft Purview DSPM for AI, audit logs, and Viva Insights to track usage and adoption trends.

What is the risk of not deploying Copilot?

Users may turn to public GenAI tools like ChatGPT, increasing the risk of data leaks and regulatory breaches.

Final Thoughts

If you are not blocking other AI tools, it is not a risk. It is already an issue. Start your Copilot readiness journey today by securing your data, applying governance, and educating users.

📌 Resources:

- Watch the full webinar

- Explore more: Microsoft 365 Copilot Governance and Security
